Daniel Hershcovich

University of Copenhagen

compositional generalization

semantics

ucca

meaning representation

amr

legal reasoning

semantic parsing

logical reasoning

meaning representations

evaluation

pragmatics

language models

parsing

natural language processing

cross-lingual transfer

21

presentations

6

number of views

SHORT BIO

Daniel Hershcovich is a Tenure-Track Assistant Professor at the NLP section, Department of Computer Science, University of Copenhagen, Denmark. He did his PhD at the Hebrew University of Jerusalem with Ari Rappoport and Omri Abend on meaning representation parsing. During 2008-2019, Daniel was also at Project Debater, IBM Research. His research interests include cross-cultural and cross-lingual adaptation of (and investigation with) NLP models, interpretable representation of natural language syntax, semantics and pragmatics, and promoting welfare, sustainability and responsible behavior through language technology.

Presentations

Probing for Hyperbole in Pre-Trained Language Models

Nina Schneidermann and 2 other authors

What does the Failure to Reason with "Respectively'' in Zero/Few-Shot Settings Tell Us about Language Models?

Ruixiang Cui and 3 other authors

On Evaluating Multilingual Compositional Generalization with Translated Datasets

Daniel Hershcovich and 1 other author

What's the Meaning of Superhuman Performance in Today's NLU?

Simone Tedeschi and 11 other authors

Cross-Cultural Transfer Learning for Chinese Offensive Language Detection

Li Zhou and 3 other authors

Towards Climate Awareness in NLP Research

Daniel Hershcovich

Compositional Generalization in Multilingual Semantic Parsing over Wikidata

Ruixiang Cui and 3 other authors

Can AMR Assist Legal and Logical Reasoning?

Nikolaus Schrack and 3 other authors

A Dataset of Sustainable Diet Arguments on Twitter

Daniel Hershcovich

Evaluating Deep Taylor Decomposition for Reliability Assessment in the Wild

Stephanie Brandl and 2 other authors

Challenges and Strategies in Cross-Cultural NLP

Daniel Hershcovich and 13 other authors

Can Language Models Encode Perceptual Structure Without Grounding? A Case Study in Color

Mostafa Abdou and 5 other authors

A Multilingual Benchmark for Probing Negation-Awareness with Minimal Pairs

Mareike Hartmann and 6 other authors

Great Service! Fine-grained Parsing of Implicit Arguments

Ruixiang Cui and 1 other author

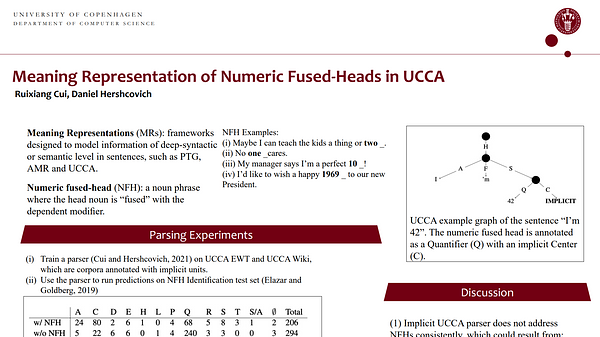

Meaning Representation of Numeric Fused-Heads in UCCA

Ruixiang Cui and 1 other author

Parsing, Evaluation and Applications

Daniel Hershcovich